Traditional Defence Consultation vs Agentic AI

The debate between traditional Defence Consultation (human-centric, deliberative processes) and the materialisation of Agentic AI (autonomous or semi-autonomous AI systems) in military decision-making revolves around core tensions of speed, trust, judgment, and control. The two opposing positions are set out below as arguments, each with its pros and cons.

Argument 1: In Favour of Traditional Defence Consultation

This view holds that human judgment, informed by experience, ethics, and context, must remain central to command and operational planning.

PROS:

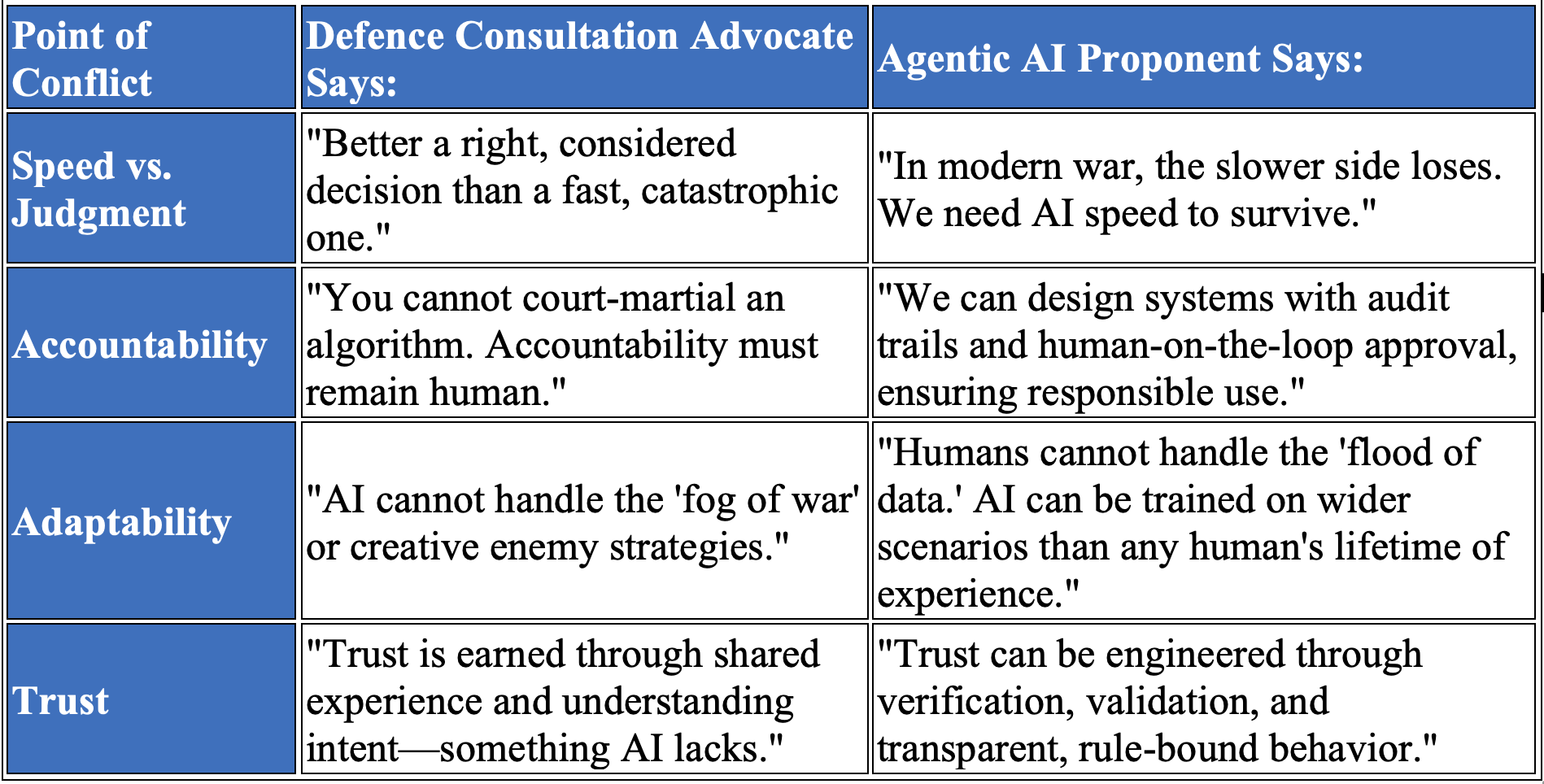

Ethical & Legal Nuance: Humans can interpret complex, ambiguous situations involving International Humanitarian Law (IHL) and Rules of Engagement (ROE) with moral reasoning and an understanding of intent, which AI lacks.

Accountability & Responsibility: Clear human chains of command establish unambiguous legal and moral accountability for decisions, a cornerstone of democratic civil-military relations.

Strategic Creativity & Intuition: Experienced commanders can employ intuition, strategic creativity, and “fingertip feeling” to devise novel solutions outside pre-programmed AI parameters.

Diplomatic and Political Sensitivity: Human consultation is essential for decisions with diplomatic implications, understanding political nuances, alliance dynamics, and second- and third-order effects.

Robustness Against Deception: Humans, while fallible, can be more sceptical of data and recognise sophisticated enemy deception or cyber-spoofing campaigns targeting AI sensors and data streams.

CONS:

Cognitive & Temporal Limitations: Human brains are slow to process vast, multi-domain data, leading to "information overload" and slower OODA (Observe, Orient, Decide, Act) loops compared to AI.

Bias and Subjectivity: Human judgment is subject to personal bias, groupthink, fatigue, and emotional and battle stress, any of which can lead to catastrophic errors.

Scalability Issues: High-level human expertise is a scarce resource. It cannot be scaled instantly to monitor thousands of simultaneous threats or coordinate complex multi-domain operations in real-time.

Inconsistency: Decisions can vary based on individual commanders, shifts, or teams, and their respective knowledge, training, experience, and access to information, leading to inconsistent application of doctrine and policy.

Argument 2: In Favour of Agentic AI Materialisation

This view argues that AI agents are necessary to manage the complexity, speed, and data volume of modern warfare, acting as indispensable force multipliers.

PROS:

Unmatched Speed & Scale: AI can analyse petabytes of data from satellites, sensors, and signals in seconds, identifying patterns and threats impossible for humans to see in time.

Consistent Application of Rules: An AI Agent, properly constrained by a formalised CONOPS, applies doctrinal rules, ROE, and policy thresholds with perfect consistency, without fatigue or emotional bias.

Enhanced Situational Awareness: By fusing multimodal and disparate data sources, AI can provide a comprehensive, real-time Common Operational Picture (COP), offering a decision advantage.

24/7 Operational Readiness: AI systems do not need rest, maintaining constant vigilance and relieving human operators of monotonous, lengthy monitoring tasks.

Tactical Optimisation: AI can rapidly generate and evaluate millions of Courses of Action (COAs) for resource allocation, logistics, targeting, and engagement, optimising for variables like operational efficiency, survivability, mutual interference and collateral damage avoidance.

CONS:

The "Black Box" Problem: Many advanced AI models lack adequate explainability. A commander may not understand why an AI recommended a specific action, eroding trust and complicating accountability.

Brittleness and Unpredictability: AI can fail catastrophically when faced with novel ("out-of-distribution") scenarios not covered in its training data or CONOPS rules, potentially leading to unintended escalation or fratricide.

Vulnerability to Technical Exploitation: AI systems are vulnerable to adversarial attacks (e.g., data poisoning, sensor spoofing, prompt injection against LLMs), which opens a new and critical attack surface.

Ethical Deskilling & Responsibility Decline: Over-reliance on AI could erode human commanders' critical thinking skills and encourage a diffusion of responsibility ("the AI made me do it"), undermining accountability.

Escalation Risks: The speed and perceived objectivity of AI-driven actions could accelerate conflict cycles, leaving less time for human deliberation and diplomatic de-escalation.

Core Tension Points Summarised

Emerging Consensus / Hybrid Stance:

Most modern defence thinkers do not see this as a binary choice but advocate a human-machine teaming or human-in-the-loop model. That model only works, though, if the human has an unbiased, Explainable AI to interact with. Here, Agentic AI materialisations are viewed as powerful consultants, in effect the most capable staff officers imaginable, that augment Defence Consultation rather than replace it: they always explain the “why”, cite the relevant doctrine, and interact with the human decision-makers. The goal is to leverage AI's pros (speed, data analysis, consistency) while mitigating its cons through ironclad, overriding human oversight, explicit ethical constraints (which must be embedded in the CONOPS), and robust technical safeguards. The ultimate challenge is designing a system that truly embeds the commander's intent and legal boundaries, making the AI a faithful and subordinate extension of human command.

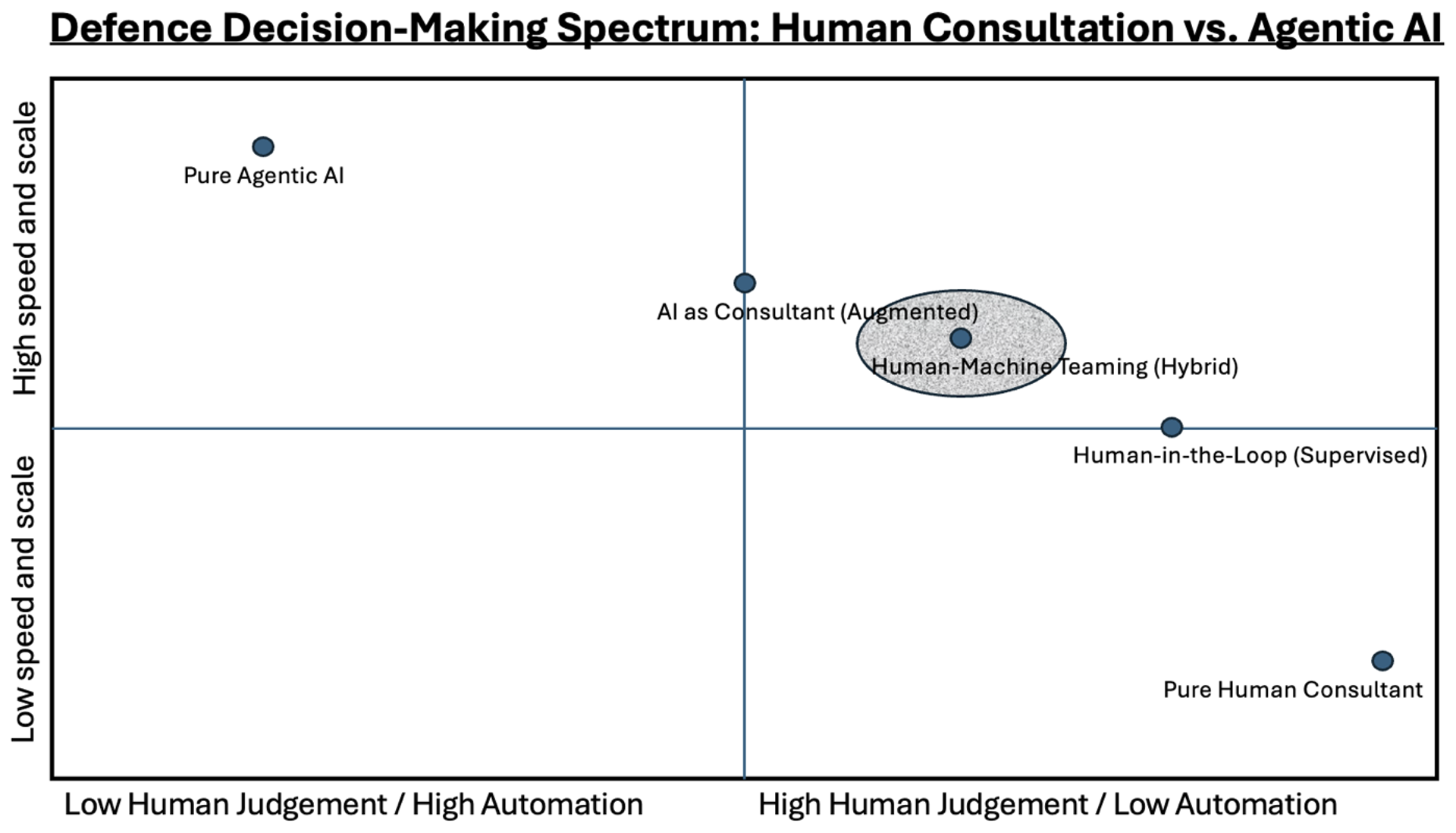

How to Interpret This Diagram:

The Axes:

X-Axis (Human Judgment ⇄ Automation): Represents the degree of human cognitive involvement vs. algorithmic execution.

Y-Axis (Speed & Scale): Represents the operational capacity for processing data and executing decisions.

The Five Conceptual Positions:

Pure Human Consultation: High judgment, low speed. The traditional model of deliberative councils and command chains.

Pure Agentic AI: High speed, low human judgment. A fully autonomous, rules-bound system—largely theoretical and highly controversial in defence.

Human-in-the-Loop: Human judgment dominates, but uses AI for data processing and option generation. The human approves every significant action.

AI as Consultant (Augmented): AI actively recommends COAs and flags risks, but the human commander is the primary decision-maker in a collaborative dialogue.

Human-Machine Teaming (Hybrid - The Synthesis): The emerging ideal. A symbiotic partnership where roles are dynamically allocated. The AI handles vast data analysis and rapid constraint checking, while the human provides intent, ethical oversight, and handles novel, ambiguous situations. This is the "mission-aware agent" embedded with the CONOPS.
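
The Human-in-the-Loop position above can be sketched in a few lines of Python. This is only an illustrative sketch: the `ProposedAction` type, its `significant` flag, and the `approve` callback (standing in for the commander's decision) are hypothetical names introduced here, not part of any real system. It shows the one property the text describes: every significant action waits for explicit human approval.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class ProposedAction:
    description: str
    significant: bool  # hypothetical flag: True means a human must sign off


def run_with_human_gate(
    proposals: List[ProposedAction],
    approve: Callable[[ProposedAction], bool],
) -> List[str]:
    """Return a log entry for each AI proposal, gating significant ones."""
    log = []
    for action in proposals:
        if not action.significant:
            # Routine actions proceed without sign-off.
            log.append(f"auto-executed: {action.description}")
        elif approve(action):
            log.append(f"approved by human: {action.description}")
        else:
            log.append(f"rejected by human: {action.description}")
    return log


proposals = [
    ProposedAction("refresh sensor-feed summary", significant=False),
    ProposedAction("reroute supply convoy", significant=True),
]
# Stand-in for the commander's decision; here the human declines everything.
log = run_with_human_gate(proposals, approve=lambda action: False)
for entry in log:
    print(entry)
```

Where the line between routine and significant actions is drawn is a policy decision; in the terms of this article it would be fixed in the CONOPS, not by the agent.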

The Central Tension (Arrow):

The fundamental trade-off is between unmatched speed & scale and nuanced human judgment & accountability. Moving towards greater automation sacrifices some direct human control for operational tempo.

The Synthesis Zone (Shaded Area):

This represents the viable, responsible design space for modern defence systems. It balances the pros of both sides while actively mitigating the cons:

Leverages AI's speed and consistency.

Preserves human ethical judgment, accountability, and creativity.

Requires robust technical governance (explainable AI, secure systems) and evolved human training (understanding AI capabilities/limits).

In essence, the debate is not about choosing a side, but about finding the optimal point in this spectrum for specific mission contexts. The goal of "embedding the CONOPS" in an AI Agent is precisely to anchor the system firmly in the Synthesis Zone, ensuring that any agentic automation is tightly constrained by and accountable to human-defined operational concepts, ethics, and command intent.

The Explainable AI (XAI) context

Explainable AI (XAI) is a critical enabling layer, but not the complete solution. The sections below cover why it is necessary, why it is insufficient on its own, and what else is required.

Why Explainable AI (XAI) is necessary: The Critical Foundation

An XAI agent bound to the CONOPS directly addresses major criticisms of "black box" AI:

Builds Trust via Transparency: By providing precise references to the CONOPS, ROE, or legal corpus for every recommendation, it allows a human commander to audit the AI's "chain of thought." This moves trust from blind faith to verifiable justification.

Anchors Accountability: It creates a clear link between the agent's output and the human-authored CONOPS. The responsibility for the rules lies with the command that wrote the CONOPS; the responsibility for acting on the AI's recommendation remains with the human operator.

Enables Effective Human Oversight: Explainability is a prerequisite for meaningful human-in-the-loop control. A commander cannot reasonably override or approve a suggestion they don't understand.
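
One way to picture "precise references for every recommendation" is as a data structure that a human, or a simple check, can audit. The sketch below is an assumption made for illustration only: the `Recommendation` type, the `audit` function, and the paragraph identifiers such as `CONOPS-3.2` are all hypothetical. It checks only that each citation resolves to a known paragraph; it does not judge whether the cited paragraph actually supports the recommendation, which remains a human task.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Recommendation:
    summary: str
    # Hypothetical identifiers of the CONOPS/ROE paragraphs relied upon.
    citations: List[str] = field(default_factory=list)


def audit(rec: Recommendation, conops: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means every citation resolves."""
    if not rec.citations:
        return ["no citation given"]
    return [f"unknown reference: {c}" for c in rec.citations if c not in conops]


# Hypothetical, simplified CONOPS: paragraph ID -> text.
conops = {"CONOPS-3.2": "Convoys avoid routes flagged as contested."}

traceable = Recommendation("Reroute convoy via the northern road", ["CONOPS-3.2"])
dangling = Recommendation("Hold position", ["CONOPS-9.9"])
uncited = Recommendation("Proceed as planned")

print(audit(traceable, conops))
print(audit(dangling, conops))
print(audit(uncited, conops))
```

A recommendation that fails this check would be sent back, not shown to the commander, so that nothing reaches the human without a verifiable justification attached.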

Why Explainable AI (XAI) is insufficient alone: The Persistent Gaps

The CONOPS Itself May Be Incomplete or Ambiguous.

Problem: No CONOPS can pre-script every possible scenario in war. Ambiguity, "fog of war," and novel enemy tactics create gaps. An AI strictly bound to the letter of the CONOPS may be brittle or silent in these gaps, whereas human judgment can apply intent and analogy.

Example: The CONOPS may not explicitly address a hybrid threat that uses civilian digital infrastructure in a novel way. An XAI agent might correctly state it has "no applicable rule," but a human commander must still decide.

Explainability ≠ Understanding or Wisdom.

Problem: An AI can generate a technically accurate justification traceable to the CONOPS without possessing true comprehension of the moral, political, or strategic second-order effects.

Example: An XAI might propose a legally compliant strike with minimal collateral damage, citing the relevant ROE. It cannot, however, weigh the potential political backlash from that strike in a sensitive region—a factor a human commander must consider.

Risk of "Automation Bias" or "Explanation Blindness".

Problem: Well-presented, authoritative explanations can lead to over-trust. A human, under stress or time pressure, may defer to a convincingly explained AI recommendation without probing its underlying assumptions or the completeness of the CONOPS.

Example: "The AI recommended it and cited three CONOPS paragraphs, so it must be correct." This can erode the very critical engagement it's designed to foster.

It Doesn't Solve the Integration Challenge.

Problem: A perfectly explainable agent is useless if it cannot access secure, real-time data feeds from C2 systems, or if its recommendations are presented in a way that disrupts, rather than enhances, the commander's workflow.
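
The first gap above, an incomplete CONOPS, can be illustrated with a minimal sketch. The names used here (`AgentResponse`, `consult`, the situation tags, and the rule table) are hypothetical and chosen only for illustration. The point is that an agent bound to the CONOPS should report "no applicable rule" explicitly, so the commander knows the decision rests entirely on human judgment, instead of stretching the nearest rule to fit.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class AgentResponse:
    status: str  # "rule_found" or "no_applicable_rule"
    rule_id: Optional[str]
    recommendation: Optional[str]


def consult(situation: str, rules: Dict[str, Tuple[str, str]]) -> AgentResponse:
    """Look up a situation tag; never guess when the CONOPS is silent."""
    match = rules.get(situation)
    if match is None:
        # Gap in the CONOPS: say so and leave the decision to the human.
        return AgentResponse("no_applicable_rule", None, None)
    rule_id, recommendation = match
    return AgentResponse("rule_found", rule_id, recommendation)


# Hypothetical rule table: situation tag -> (CONOPS paragraph, recommendation).
rules = {
    "convoy_route_contested": ("CONOPS-3.2", "Propose an alternative route"),
}

covered = consult("convoy_route_contested", rules)
gap = consult("novel_use_of_civilian_network", rules)
print(covered)
print(gap)
```

In both cases the human still decides; the `status` field only tells the commander whether the agent has doctrinal support to offer.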

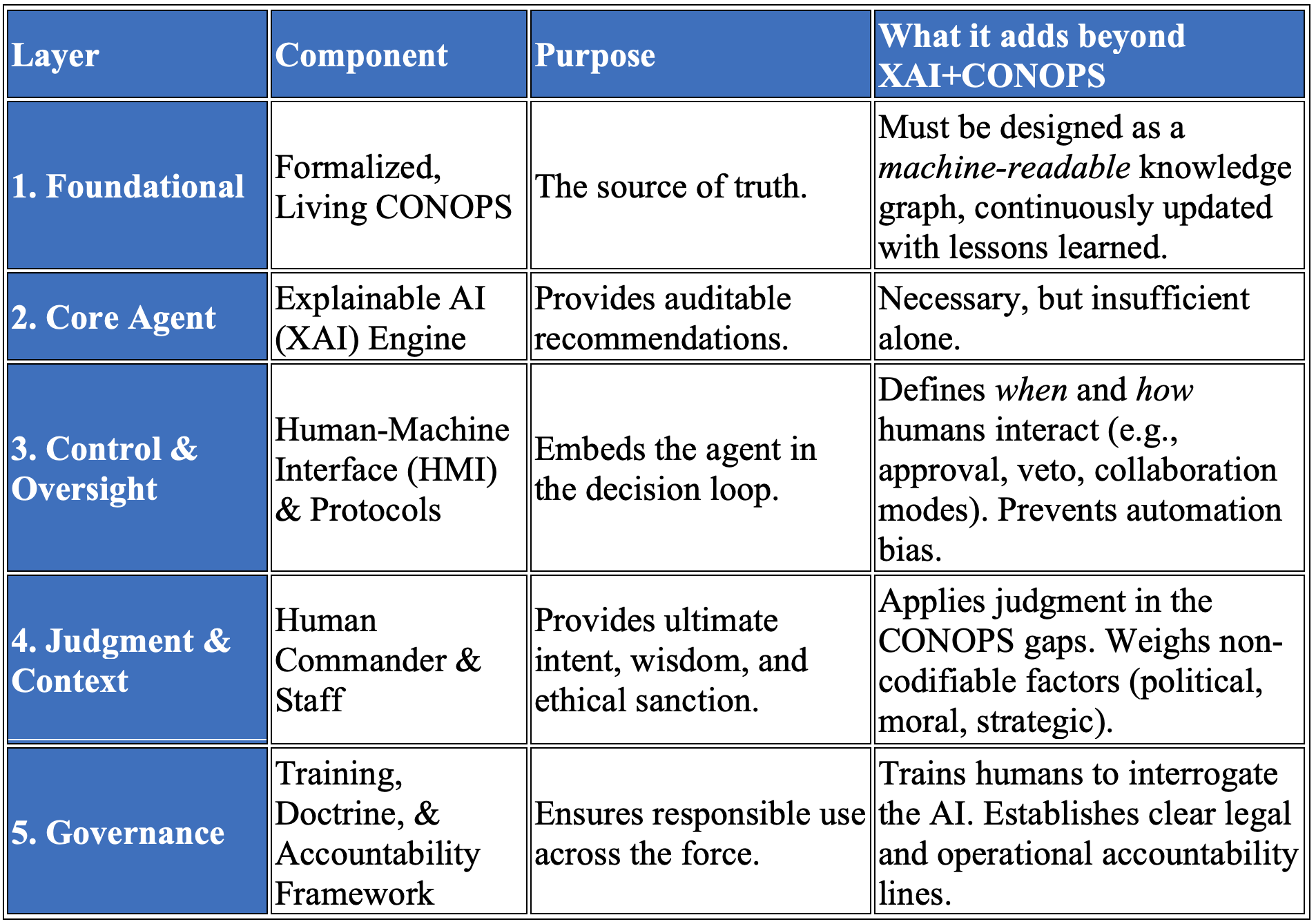

The required complete solution: A Multi-Layer Architecture

For the agent to be sufficient, it must be part of a broader human-machine teaming ecosystem. Here’s what must surround the XAI + CONOPS core:

What it adds beyond XAI + CONOPS

CONCLUSION: The "Three-Legged Stool"

An Explainable AI (XAI) Agent with CONOPS references is a vital leg, but the stool collapses without the other two:

1. Leg 1: Technologically Robust & Constrained Agent (The XAI+CONOPS agent).

2. Leg 2: Contextually Expert Human (Trained, sceptical, empowered to override).

3. Leg 3: Resilient Organisational Process (Clear protocols, updated CONOPS, continuous evaluation).

Therefore, while such an agent is a necessary precondition for trustworthy Agentic AI in defence, it is only sufficient when deeply embedded within a mature system of human oversight, adaptive doctrine, and rigorous governance. The goal is not to create an AI that replaces consultation, but one that enhances it, making the human decision-maker more informed, faster, and more consistent, while the human never abdicates ultimate judgment.